One Privacy Mistake Nearly Everyone Makes With ChatGPT (and how to fix it)

Your AI prompts may be stored in a place you’d never expect. Here’s how to fix it before it exposes you.

We’re breaking some privacy news here (we think).

If you use ChatGPT, go check your browser history. You might be shocked at what’s there.

While testing Proton’s Lumo AI for our last newsletter post (available here), we discovered something surprising:

If you use ChatGPT or certain other AI chat tools (including Gemini) in a browser, your prompts are saved in your browsing history.

That “private” chat where you asked about a sensitive health issue or a confidential work question? It’s easily accessible in your browser history like any regular web search query or visit to a website.

We ended up testing five popular Gen AI tools to evaluate this flaw. All had some variation of the flaw except for one. Keep reading to figure out the privacy winner, along with how to address the flaw in the others.

What is Happening?

While testing out Proton’s Lumo, we used a sensitive query: “STD Signs”.

In the course of our testing, we discovered that the prompt showed up in our browser history.

We wondered - is this unique to Lumo? Or is it a wider problem?

Turns out, it’s a widespread problem affecting at least Lumo, ChatGPT, Perplexity and Gemini.

Note: We did not test Claude since they require a login. We did include DuckDuckGo since they have a standalone AI tool similar to ChatGPT. Brave Leo is excluded since their AI tool is embedded in their search engine.

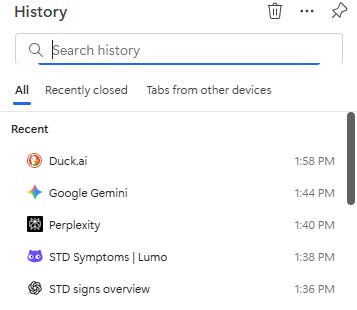

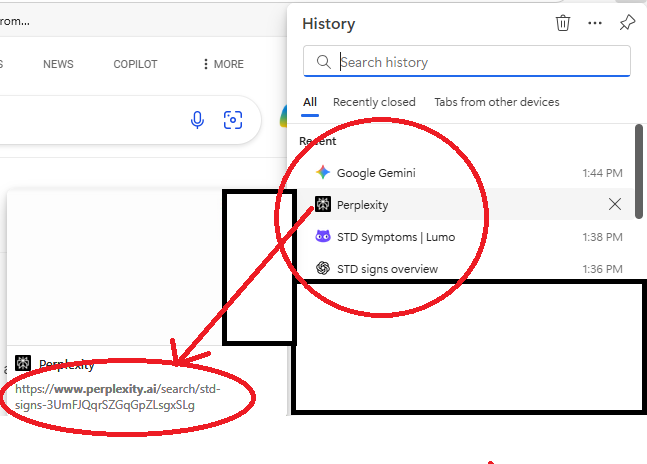

Here’s a snapshot of our general history. At first glance, only Lumo and ChatGPT reveal a summary of the prompt (not good!). Perplexity, Gemini and DuckDuckGo privatize the prompt in the history summary. But...

The prompt becomes visible for Gemini and Perplexity in two situations. One is if you hover over the entry. The other is if you click on the link, which takes you directly to the prompt.

Based on our test, DuckDuckGo completely privatizes the prompt in the history, both visually and when clicking on the link. It simply takes you to a blank chat page.

Well done, DuckDuckGo!

Why This Happens

First, this situation isn’t a data breach. It’s an intentional design choice (or oversight?) by these chat providers. We know that privatizing the prompt is possible since DuckDuckGo managed to do it.

Most AI chat tools generate unique URLs as you start a conversation, and the text of your prompt can get embedded in that URL. Your browser then saves the URL in its history, just like it would with any search engine query.
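To make the mechanism concrete, here is a minimal Python sketch of how a prompt can be read straight back out of a saved URL. The host `chat.example.com` and the parameter names `q` and `prompt` are assumptions for illustration only; each tool structures its URLs differently, and some put nothing readable in them at all.

```python
from urllib.parse import urlsplit, parse_qs

def prompt_from_url(url, param_names=("q", "prompt")):
    """Return the first prompt-like query parameter value in a URL, or None.

    The parameter names are hypothetical; real tools may use other names.
    """
    query = parse_qs(urlsplit(url).query)
    for name in param_names:
        values = query.get(name)
        if values:
            return values[0]
    return None

if __name__ == "__main__":
    # Hypothetical URL of the kind a browser would store in its history.
    print(prompt_from_url("https://chat.example.com/?q=STD+signs"))
    # A URL that only carries an opaque conversation ID reveals no prompt text.
    print(prompt_from_url("https://chat.example.com/c/abc123"))
```

Anything that can read the history entry, whether a person, a script or an extension, can do the same decoding.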

It’s easy to see why most users wouldn’t notice this. ChatGPT and other AI tools feel like self-contained apps, so you assume your prompts stay inside the chat window. In reality, some leave a local trail just like a Google search would.

What's interesting is this situation reveals how there are levels to privacy or at least what you might consider "private".

While nobody would describe ChatGPT or Gemini as privacy-friendly, users still have a certain expectation about the visibility of their chats. When logged in to ChatGPT or Gemini, you may think that it’s "only" OpenAI or Google that knows what you're typing, not the public or your friends or significant other.

But as we discovered, that’s not the case. Now we know those "private" chats can show up in your browsing history.

Is This Worth Caring About?

For many people, this may feel trivial. “So what? My browser history is private.” But there are important reasons you should care:

Shared devices: If you use a family computer, a shared tablet, or a work machine, anyone with access can see your prompts. A single prompt could reveal personal health questions, confidential work details, or information about your financial life.

Bigger attack surface: Every copy of your data is a potential vulnerability. If your device is ever lost, stolen, or hacked, a detailed browser history full of AI prompts becomes a goldmine. Prompts often contain context that attackers can use for targeted phishing, identity theft, or even blackmail.

By some estimates, nearly 50% of all browser extensions pose a security risk or are outright malware. As the value of prompt data increases, expect these extensions to start targeting the AI chat data sitting in users’ browser history, which, in the extreme, could be used for blackmail. For more background on the dangers of poorly vetted browser extensions, check out this post by Jason Rowe.

Expectation gap: AI chat tools feel private. They look and act like messaging apps, so you’re more likely to share personal details you’d never type into a search engine. As we have reported before, people are talking to these AI bots like therapists and girlfriends. That misplaced sense of privacy can lead you to overshare. Once that information is in your browser history, it’s exposed to risks you probably never considered.
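If you want to see how much of this is already sitting on your own machine, the sketch below is one way to audit it in Python. It assumes a Chromium-style history database (a SQLite file with a `urls` table holding `url` and `title` columns) and a host list we made up for illustration. Other browsers store history differently, so treat this as a starting point rather than a finished tool.

```python
import sqlite3
from urllib.parse import urlsplit

# Assumed host list for illustration; edit it to match the tools you use.
AI_HOSTS = ("chatgpt.com", "gemini.google.com", "perplexity.ai")

def find_ai_history(conn, hosts=AI_HOSTS):
    """Return (url, title, has_query) for history rows on the given hosts."""
    hits = []
    for url, title in conn.execute("SELECT url, title FROM urls ORDER BY url"):
        parts = urlsplit(url)
        host = parts.hostname or ""
        # Match the host itself or any subdomain of it (e.g. www.).
        if any(host == h or host.endswith("." + h) for h in hosts):
            hits.append((url, title, bool(parts.query)))
    return hits

if __name__ == "__main__":
    # Demo on an in-memory stand-in for a copied history file.
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE urls (url TEXT, title TEXT)")
    demo.executemany(
        "INSERT INTO urls VALUES (?, ?)",
        [
            ("https://chatgpt.com/?q=STD+signs", "STD Signs"),  # hypothetical entry
            ("https://news.example.org/story", "Some news story"),
        ],
    )
    for row in find_ai_history(demo):
        print(row)
    demo.close()
```

To run it against real data, you would pass `sqlite3.connect(path_to_copy)` instead of the demo connection. Work on a copy of the history file rather than the live one, since the browser typically keeps the original locked while it is open, and the file's location varies by browser and operating system.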

The Skeptic's Take

You could argue this is no different than a regular Google search, and that’s partly true. Your browser history is private by default, and you can always clear it. Plus, the AI provider already has your prompts.

But here’s the difference: users don’t expect chat prompts to leave the chat window. That expectation gap may lead people to share far more sensitive information than they might in a search bar. And that’s why this matters.

How to Protect Yourself

There’s no single perfect solution, but you can reduce your exposure with one of these approaches:

1. Use the official desktop or mobile app. Native apps don’t generate browser history trails (more on this below).

2. Use a private browsing mode. Incognito or Private Mode prevents your browser from saving session history.

3. Clear your history regularly. If you prefer the browser version, make it a habit to clear your history and cache.

4. Use a dedicated browser profile. Keep AI tools in a separate profile you can wipe easily and control better.

Our recommended approach? A combination of #2 and #4.

This would mean running your browser-based Gen AI searches in private browsing mode, on both desktop and mobile. That turns off browser history for those sessions and avoids contaminating your regular browsing history. You could even use Brave for your regular browsing and a different browser for your AI searches. There are a few different permutations that get you more or less to the same spot.
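For readers comfortable with a little scripting, one of those permutations (a dedicated profile opened in a private window) can be sketched in Python as below. It assumes a Chromium-based browser, which accepts the `--user-data-dir` and `--incognito` command-line switches. The browser path, profile folder and URL shown are placeholders, not recommendations.

```python
def private_ai_command(browser_path, profile_dir, url):
    """Build a Chromium-style command: separate profile plus incognito window."""
    return [
        browser_path,
        f"--user-data-dir={profile_dir}",  # keeps AI chats out of your main profile
        "--incognito",                     # no history saved for this window
        url,
    ]

if __name__ == "__main__":
    # Placeholder values; substitute your own browser path, folder and AI tool.
    cmd = private_ai_command(
        "/usr/bin/chromium", "/tmp/ai-profile", "https://chat.example.com"
    )
    print(cmd)
```

Passing the resulting list to `subprocess.run` would launch the window. Other browsers use different switches, so check your browser's documentation before adapting this.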

Bonus: we also recommend further compartmentalizing your AI chat uses as follows:

Use a mainstream Gen AI tool (like ChatGPT or Claude) for your high-value work, such as coding or writing. This is a situation where you need to retain your prompt history for future use, so you stay logged in and your prompts and output are saved.

Use Brave Leo (or your preferred private AI tool) for your more sensitive or general searches, such as medical questions and "embarrassing" things. Really, this covers anything personal that you don't want tied to you and used to build or augment a profile. You are not logged into an account here, and you're either clearing your history regularly or using private browsing mode.

What About Our Usual “Use the Browser, Not the App” Advice?

Our standard recommendation is to use the browser version of an app whenever possible because installed apps typically request more permissions and track more data. But AI chat tools are a unique case.

Browser version: Lower device-level risk, but leaves a local trail.

Native app: Higher corporate surveillance risk, but no local history artifacts.

Which option you choose comes down to the sensitivity of your prompts and your risk tolerance. If you’re typing anything you wouldn’t want someone else to stumble upon, the app may be the better move.

Bottom line

AI chat tools are powerful, but they’re still built on the same web infrastructure that logs everything you do. Don’t assume your prompts are private just because the interface feels like a closed system.

A few small tweaks, like using incognito mode or clearing your history, can close this gap and keep your sensitive prompts out of sight.

And if this discovery surprised you, there’s a good chance other personal data may be exposed in ways you haven’t thought about. Our guide, How Exposed Are You Online?, helps you find out exactly what’s floating around about you, and what to do about it.

Friendly Ask

If you know someone who uses ChatGPT or another Gen AI tool, share this post to help them keep their sensitive prompts private. They’ll thank you and you will look like a hero.

Looking for help with a privacy issue or privacy concern? Chances are we’ve covered it already or will soon. Follow us on X and LinkedIn for updates on this topic and other internet privacy related topics.

Disclaimer: None of the above is to be deemed legal advice of any kind. These are *opinions* written by a privacy and tech attorney with years of working for, with and against Big Tech and Big Data. And this post is for informational purposes only and is not intended for use in furtherance of any unlawful activity. This post may also contain affiliate links, which means that at no additional cost to you, we earn a commission if you click through and make a purchase.

